How to push logs to Observability

Precondition: Create Logs

First, your operating system, service, or application has to produce logs. Any logging library can be used. The logs can be handed over in two ways:

- written to a log file, or

- written to standard output (stdout).
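The following sketch shows one way a Python application could use the standard `logging` module to write one JSON object per line to stdout and, optionally, to a log file. The field names `ts`, `level`, `caller`, and `msg` are an assumption. They were chosen to match the fields that the Fluent Bit parser on this page decodes. Any other structure works as long as the collector is configured for it.

```python
import json
import logging
import sys
from typing import Optional


class JsonLineFormatter(logging.Formatter):
    """Formats every log record as a single JSON object on one line."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            # Local time in ISO 8601 style, seconds precision
            "ts": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname.lower(),
            "caller": f"{record.module}:{record.lineno}",
            "msg": record.getMessage(),
        })


def build_logger(name: str, logfile: Optional[str] = None) -> logging.Logger:
    """Return a logger that writes JSON lines to stdout and, optionally, to a file."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # avoid duplicate lines via the root logger
    handlers = [logging.StreamHandler(sys.stdout)]
    if logfile is not None:
        handlers.append(logging.FileHandler(logfile))
    for handler in handlers:
        handler.setFormatter(JsonLineFormatter())
        logger.addHandler(handler)
    return logger


if __name__ == "__main__":
    log = build_logger("demo-app")
    log.info("service started on port %d", 3000)
```

Writing to stdout suits containers, where the runtime or a logging driver picks up the output. The file handler covers the log-file case, for example on a VM.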

Create an Agent to scrape and route logs

Once the logs exist, they have to be collected. We recommend Grafana Alloy or Fluent Bit with the Loki plugin. Example configurations for both tools follow.

Example: Setup using Grafana Alloy

In this example, we collect logs from different sources on a host, for example a VM.

Grafana Alloy collects all logs and sends them to the Loki endpoint of an Observability instance.
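To make the data flow concrete, agents such as Alloy or Fluent Bit send batches of log lines to the Loki push endpoint as an HTTP POST with basic authentication. The Python sketch below builds such a request with the standard library. It assumes the JSON variant of the Loki push API: `streams`, each with a `stream` label set and `values` pairs of a nanosecond timestamp and a log line. The URL pattern is the one used in the Alloy configuration below. The function names are illustrative, and the cluster, instance ID, and credentials are placeholders.

```python
import base64
import json
import time
import urllib.request
from typing import Dict, List, Optional


def push_url(cluster: str, instance_id: str) -> str:
    """Build the Loki push URL of an Observability instance (pattern from this page)."""
    return (f"https://logs.stackit{cluster}.argus.eu01.stackit.cloud"
            f"/instances/{instance_id}/loki/api/v1/push")


def build_push_request(url: str, username: str, password: str,
                       labels: Dict[str, str], lines: List[str],
                       now_ns: Optional[int] = None) -> urllib.request.Request:
    """Create (but do not send) a Loki push request carrying one log stream."""
    ts = now_ns if now_ns is not None else time.time_ns()
    # Loki expects timestamps as strings of nanoseconds since the Unix epoch.
    values = [[str(ts + i), line] for i, line in enumerate(lines)]
    body = json.dumps({"streams": [{"stream": labels, "values": values}]}).encode()
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json",
                 "Authorization": f"Basic {token}"},
    )


if __name__ == "__main__":
    req = build_push_request(
        push_url("[cluster]", "[instanceId]"), "[username]", "[password]",
        {"job": "manual-test"}, ["hello from python"])
    # urllib.request.urlopen(req)  # uncomment after filling in real values
    print(req.full_url)
```

A hand-written request like this is mainly useful for checking the URL and credentials. For continuous shipping, use one of the agents below.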

Grafana Alloy configuration

Replace [cluster], [instanceId], [username], and [password] in the following configuration file.

config.alloy

```
local.file_match "node_logs" {
  path_targets = [{
      // Monitor syslog to scrape node-logs
      __path__  = "/var/log/syslog",
      job       = "node/syslog",
      node_name = sys.env("HOSTNAME"),
      cluster   = "[cluster]",
  }]
}

// loki.source.file reads log entries from files and forwards them to other loki.* components.
// You can specify multiple loki.source.file components by giving them different labels.
loki.source.file "node_logs" {
  targets    = local.file_match.node_logs.targets
  forward_to = [loki.write.loki_instance.receiver]
}

logging {
  level    = "info"
  format   = "json"
  write_to = [loki.write.loki_instance.receiver]
}

loki.write "loki_instance" {
  endpoint {
    url = "https://logs.stackit[cluster].argus.eu01.stackit.cloud/instances/[instanceId]/loki/api/v1/push"
    basic_auth {
      username = "[username]"
      password = "[password]"
    }
    retry_on_http_429 = true
  }
}
```

Docker Compose

Use the following Docker Compose file to run Grafana Alloy in a Docker container. The Alloy UI and API are accessible on port 12345.

docker-compose.yml

```yaml
services:
  alloy:
    image: grafana/alloy:latest
    container_name: alloy
    command:
      - run
      - --server.http.listen-addr=0.0.0.0:12345
      - --storage.path=/var/lib/alloy/data
      - /etc/alloy/config.alloy
    ports:
      - "12345:12345"
    volumes:
      - ./config.alloy:/etc/alloy/config.alloy:ro
      # host logs read by local.file_match (e.g. /var/log/syslog)
      - /var/log:/var/log:ro
      - /var/run/docker.sock:/var/run/docker.sock:ro
      - alloy-data:/var/lib/alloy/data
    restart: unless-stopped

volumes:
  alloy-data:
```

Fluent Bit

The second example collects container logs with Fluent Bit. Grafana Alloy can be used for this as well.

Fluent Bit Configuration

Replace [cluster], [instanceId], [username], and [password] in the following configuration file, and save it as fluent-bit.conf in the logging directory that is mounted into the container.

fluent-bit.conf

```
[OUTPUT]
    Name         loki
    Match        *
    Tls          on
    Host         logs.stackit[cluster].argus.eu01.stackit.cloud
    Uri          /instances/[instanceId]/loki/api/v1/push
    Port         443
    # FLUENT_ENV is read from the container environment
    Labels       job=fluent-bit,env=${FLUENT_ENV}
    Http_User    [username]
    Http_Passwd  [password]
    Line_format  json

[SERVICE]
    # Fluent Bit needs a parser; this must point to the parsers file
    Parsers_File  /fluent-bit/etc/parsers.conf
    Flush         5
    Daemon        Off
    Log_Level     debug

[FILTER]
    Name      parser
    Match     *
    Parser    docker
    Key_name  log

[INPUT]
    Name    forward
    Listen  0.0.0.0
    Port    24224
```

Parsers Configuration

parsers.conf

```
[PARSER]
    Name            docker
    Format          json
    Time_Key        time
    Time_Format     %Y-%m-%dT%H:%M:%S.%L
    Time_Keep       On
    Decode_Field_As json log log
    Decode_Field_As json level
    Decode_Field_As json ts
    Decode_Field_As json caller
    Decode_Field_As json msg msg
```

Docker Compose file

docker-compose.yaml

```yaml
services:
  fluentbit:
    image: grafana/fluent-bit-plugin-loki:2.4.1-amd64
    container_name: fluentbit_python_local
    volumes:
      - ./logging:/fluent-bit/etc # logging directory contains parsers.conf and fluent-bit.conf
    ports:
      - "24224:24224"
      - "24224:24224/udp"

  app:
    build:
      context: ./app
      dockerfile: ./docker/local/Dockerfile
    image: app
    container_name: app
    privileged: true
    volumes:
      - ./:/app
    ports:
      - "3000:3000"
    command: sh -c 'air'
    logging:
      driver: fluentd # to make fluentbit work with docker this driver is needed
```

Install Fluent Bit for Loki in the customer's Kubernetes cluster

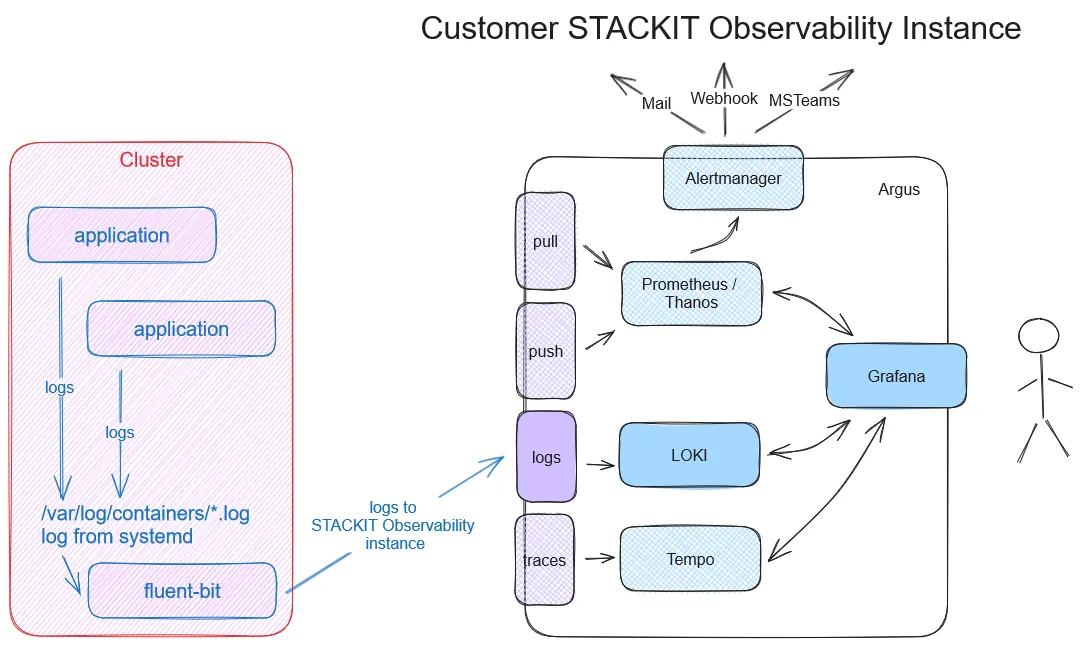

Overview

To send the logs of one or more applications to Loki, install Fluent Bit in the customer's cluster. Fluent Bit then forwards the logs to the customer's Observability instance.

Logs can also be sent to multiple STACKIT Observability instances. The URL of each Fluent Bit output must contain the ID of the target instance.
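With Fluent Bit, one way to ship logs to several instances is to define one `loki` output section per instance, each with its own `uri`. The hypothetical Python helper below renders such sections from a list of instance IDs. The host and URI pattern comes from the configuration on this page. The helper name, the `match *` choice (every instance receives all logs), and the per-instance credential variables `FLUENT_USER_<n>`/`FLUENT_PASS_<n>` are assumptions for illustration. Those variables would also have to be provided to the Fluent Bit container.

```python
from typing import List

# Output section modelled on the [OUTPUT] block used on this page.
OUTPUT_TEMPLATE = """[OUTPUT]
    name         loki
    match        *
    host         logs.stackit{cluster}.argus.eu01.stackit.cloud
    uri          /instances/{instance_id}/loki/api/v1/push
    port         443
    http_user    ${{FLUENT_USER_{n}}}
    http_passwd  ${{FLUENT_PASS_{n}}}
    tls          on
    tls.verify   on
    line_format  json
    labels       job=fluent-bit
"""


def render_outputs(cluster: str, instance_ids: List[str]) -> str:
    """Render one Fluent Bit loki [OUTPUT] section per Observability instance."""
    sections = [
        OUTPUT_TEMPLATE.format(cluster=cluster, instance_id=iid, n=n)
        for n, iid in enumerate(instance_ids, start=1)
    ]
    return "\n".join(sections)


if __name__ == "__main__":
    print(render_outputs("[cluster]", ["instance-a", "instance-b"]))
```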

Check your Fluent Bit version: versions older than 2.2.2 do not support URLs with a non-standard path.

Installation in a Kubernetes cluster

- Fluent Bit on Kubernetes: https://docs.fluentbit.io/manual/installation/downloads/kubernetes#installation

- Fluent Bit Loki output: https://docs.fluentbit.io/manual/pipeline/outputs/loki

- Fluent Bit Helm chart: https://github.com/fluent/helm-charts (use chart version fluent-bit-0.43.0 or higher)

- Docker image: https://hub.docker.com/r/fluent/fluent-bit (use image version 2.2.2 or higher)
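Fluent Bit only supports a non-standard URL path from version 2.2.2 on, so it can help to check the image tag before deploying. The small Python check below is hypothetical. It assumes tags of the form `major.minor.patch`, optionally with a leading `v` or a suffix such as `-debug`. Floating tags such as `latest`, which the DaemonSet below uses, cannot be verified this way and are reported as unknown (`None`).

```python
import re
from typing import Optional, Tuple

MIN_VERSION = (2, 2, 2)  # minimum Fluent Bit version named on this page


def parse_tag(image: str) -> Optional[Tuple[int, ...]]:
    """Extract (major, minor, patch) from an image reference like 'fluent/fluent-bit:2.2.2'."""
    last_segment = image.rsplit("/", 1)[-1]
    # Only a ':' in the last path segment separates a tag (not a registry host:port).
    tag = last_segment.rsplit(":", 1)[1] if ":" in last_segment else ""
    match = re.match(r"^v?(\d+)\.(\d+)\.(\d+)", tag)
    if match is None:
        return None  # no tag, or a floating tag such as 'latest'
    return tuple(int(part) for part in match.groups())


def is_supported(image: str) -> Optional[bool]:
    """True/False if the version is known, None if it cannot be determined."""
    version = parse_tag(image)
    return None if version is None else version >= MIN_VERSION


if __name__ == "__main__":
    for ref in ["fluent/fluent-bit:2.2.2", "fluent/fluent-bit:2.1.10", "fluent/fluent-bit:latest"]:
        print(ref, is_supported(ref))
```

Pinning a specific tag instead of `latest` makes the deployed version predictable and checkable.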

fluent-bit deployment example

Deploy the following files to your application cluster, in a separate namespace:

- Create a namespace

namespace.yaml

```yaml
kind: Namespace
apiVersion: v1
metadata:
  name: kube-logging
```

- Create a service account

service-account.yaml

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluent-bit
  namespace: kube-logging
```

- Create a cluster role

role.yaml

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: fluent-bit-read
rules:
  - apiGroups: [""]
    resources:
      - namespaces
      - pods
    verbs: ["get", "list", "watch"]
```

- Create a cluster role binding

role-binding.yaml

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: fluent-bit-read
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: fluent-bit-read
subjects:
  - kind: ServiceAccount
    name: fluent-bit
    namespace: kube-logging
```

- Create credentials for your Observability instance with the following steps:

- open https://portal.stackit.cloud/

- select a project

- open Services in the Overview section

- if necessary, create an Observability service

- click the instance name

- click Create credentials in the Credentials section

- give the credentials a name

- save the credentials with the Copy JSON button

- Create a secret with your fluent-bit credentials

Replace username and password with the STACKIT credentials you generated in the previous step.
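Alternatively, the secret can be generated from the JSON copied in the portal instead of edited by hand. The Python sketch below assumes that this JSON contains the keys `username` and `password`. Check the JSON you copied and adapt the key names if they differ. The sketch builds a Secret manifest equivalent to secret.yaml in JSON form, which `kubectl apply -f` also accepts. The script and file names are hypothetical.

```python
import json
import sys


def secret_manifest(credentials_json: str) -> dict:
    """Build the fluent-bit-secrets Secret from the credentials JSON copied in the portal.

    Assumes the JSON has 'username' and 'password' keys (an assumption; verify them).
    """
    creds = json.loads(credentials_json)
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": "fluent-bit-secrets", "namespace": "kube-logging"},
        "type": "Opaque",
        "stringData": {
            "username": creds["username"],
            "password": creds["password"],
        },
    }


# Usage (hypothetical file names):
#   python make_secret.py credentials.json > secret.json
#   kubectl apply -f secret.json
if __name__ == "__main__" and len(sys.argv) == 2:
    with open(sys.argv[1]) as f:
        print(json.dumps(secret_manifest(f.read()), indent=2))
```

Keep both the copied JSON and the generated file out of version control, because they contain the plain-text password.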

secret.yaml

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: fluent-bit-secrets
  namespace: kube-logging
type: Opaque
stringData:
  username: "username"
  password: "password"
```

- Create a configmap containing all Fluent Bit config files

Customize the following fields in the OUTPUT section of fluent-bit.conf in configmap.yaml:

host: logs.stackit[cluster].argus.eu01.stackit.cloud

uri: /instances/[instanceId]/loki/api/v1/push
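The `cri` parser in the configmap below splits each line that the container runtime writes to /var/log/containers/*.log into a timestamp, a stream (stdout or stderr), a log tag (F for a full line, P for a partial one), and the message. The sketch below applies the same regex in Python to show what it extracts. Python spells named groups `(?P<name>...)` instead of `(?<name>...)`. The sample line is made up for illustration.

```python
import re

# Same pattern as the 'cri' parser, translated to Python's named-group syntax.
CRI_LINE = re.compile(
    r"^(?P<time>[^ ]+) (?P<stream>stdout|stderr) (?P<logtag>[^ ]*) (?P<message>.*)$"
)


def parse_cri(line: str) -> dict:
    """Split one CRI log line into its fields; raise ValueError if it does not match."""
    match = CRI_LINE.match(line)
    if match is None:
        raise ValueError(f"not a CRI log line: {line!r}")
    return match.groupdict()


if __name__ == "__main__":
    sample = '2024-05-02T10:15:30.123456789+00:00 stdout F {"level":"info","msg":"ready"}'
    print(parse_cri(sample))
```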

configmap.yaml

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: fluent-bit-config
  namespace: kube-logging
  labels:
    k8s-app: fluent-bit
data:
  fluent-bit.conf: |
    [SERVICE]
        Daemon        Off
        Flush         1
        Log_Level     info
        Parsers_File  /fluent-bit/etc/parsers.conf
        Parsers_File  /fluent-bit/etc/conf/custom_parsers.conf
        HTTP_Server   On
        HTTP_Listen   0.0.0.0
        HTTP_Port     2020
        Health_Check  On

    [INPUT]
        Name              tail
        Path              /var/log/containers/*.log
        Parser            cri
        Tag               kube.*
        Mem_Buf_Limit     5MB
        Skip_Long_Lines   On

    [INPUT]
        Name              systemd
        Tag               host.*
        Systemd_Filter    _SYSTEMD_UNIT=kubelet.service
        Read_From_Tail    On

    [FILTER]
        Name                 kubernetes
        Match                kube.*
        Merge_Log            On
        Keep_Log             Off
        K8S-Logging.Parser   On
        K8S-Logging.Exclude  On

    [OUTPUT]
        name            loki
        match           *
        host            logs.stackit[cluster].argus.eu01.stackit.cloud
        uri             /instances/[instanceId]/loki/api/v1/push
        port            443
        http_user       ${FLUENT_USER}
        http_passwd     ${FLUENT_PASS}
        tls             on
        tls.verify      on
        line_format     json
        labels          job=fluent-bit
        label_map_path  /fluent-bit/etc/conf/labelmap.json

  parsers.conf: |
    [PARSER]
        Name        cri
        Format      regex
        Regex       ^(?<time>[^ ]+) (?<stream>stdout|stderr) (?<logtag>[^ ]*) (?<message>.*)$
        Time_Key    time
        Time_Format %Y-%m-%dT%H:%M:%S.%L%z

  custom_parsers.conf: |
    [PARSER]
        Name            docker
        Format          json
        Time_Key        time
        Time_Format     %Y-%m-%dT%H:%M:%S.%L
        Time_Keep       On
        Decode_Field_As json log log
        Decode_Field_As json level
        Decode_Field_As json ts
        Decode_Field_As json caller
        Decode_Field_As json msg msg

  labelmap.json: |-
    {
      "kubernetes": {
        "container_name": "container",
        "host": "node",
        "labels": {
          "app": "app",
          "release": "release"
        },
        "namespace_name": "namespace",
        "pod_name": "instance"
      },
      "stream": "stream"
    }
```

- Create the Fluent Bit daemonset

daemonset.yaml

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluent-bit
  namespace: kube-logging
  labels:
    app.kubernetes.io/name: fluent-bit
    app.kubernetes.io/instance: fluent-bit-loki
spec:
  selector:
    matchLabels:
      k8s-app: fluent-bit-logging
  template:
    metadata:
      labels:
        k8s-app: fluent-bit-logging
    spec:
      serviceAccountName: fluent-bit
      terminationGracePeriodSeconds: 10
      containers:
        - name: fluent-bit
          image: "fluent/fluent-bit:latest"
          imagePullPolicy: Always
          command:
            - /fluent-bit/bin/fluent-bit
          args:
            - --workdir=/fluent-bit/etc
            - --config=/fluent-bit/etc/conf/fluent-bit.conf
          ports:
            - name: http
              containerPort: 2020
              protocol: TCP
          livenessProbe:
            httpGet:
              path: /
              port: http
          readinessProbe:
            httpGet:
              path: /api/v1/health
              port: http
          volumeMounts:
            - name: varlog
              mountPath: /var/log
            - name: varlibdockercontainers
              mountPath: /var/lib/docker/containers
              readOnly: true
            - name: journal
              mountPath: /journal
              readOnly: true
            - name: fluent-bit-config
              mountPath: /fluent-bit/etc/conf
          env:
            - name: FLUENT_USER
              valueFrom:
                secretKeyRef:
                  name: fluent-bit-secrets
                  key: username
            - name: FLUENT_PASS
              valueFrom:
                secretKeyRef:
                  name: fluent-bit-secrets
                  key: password
      volumes:
        - name: varlog
          hostPath:
            path: /var/log
        - name: journal
          hostPath:
            path: /var/log/journal
        - name: varlibdockercontainers
          hostPath:
            path: /var/lib/docker/containers
        - name: fluent-bit-config
          configMap:
            name: fluent-bit-config
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
```

Test application deployment

- Create a small test application that produces logs. First, create a volume for the nginx test application:

mypvc.yaml

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi # Request 1 Gigabyte of storage
```

- Now deploy an nginx web server

nginx-deployment.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80
          volumeMounts:
            - name: data
              mountPath: /usr/share/nginx/html
          resources:
            limits:
              memory: "256Mi"
              cpu: "200m"
            requests:
              memory: "128Mi"
              cpu: "100m"
          livenessProbe:
            httpGet:
              path: /
              port: 80
            initialDelaySeconds: 15
            periodSeconds: 10
          readinessProbe:
            httpGet:
              path: /
              port: 80
            initialDelaySeconds: 5
            periodSeconds: 5
      volumes:
        - name: data
          persistentVolumeClaim:
            claimName: my-pvc
```

- Check the pods after deployment

```
$ kubectl get namespaces
NAME              STATUS   AGE
default           Active   6d
kube-logging      Active   4d3h
kube-node-lease   Active   6d
kube-public       Active   6d
kube-system       Active   6d

$ kubectl get pods -n kube-logging
NAME               READY   STATUS    RESTARTS   AGE
fluent-bit-c9b8d   1/1     Running   0          23h

$ kubectl get pods -n default
NAME                                READY   STATUS    RESTARTS   AGE
nginx-deployment-58fc999d7b-56jrq   1/1     Running   0          9m2s
nginx-deployment-58fc999d7b-5gkwz   1/1     Running   0          9m2s
nginx-deployment-58fc999d7b-gxbfr   1/1     Running   0          9m2s
```

Check Logs

Now open Grafana and check the logs using the Loki data source.
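With the Kubernetes configuration above, Fluent Bit attaches `job=fluent-bit` plus the labels defined in labelmap.json, so these are the labels you can filter on in Grafana. The Python sketch below applies that label map to a sample record shaped like the output of the kubernetes filter, with invented record values. It then builds a LogQL stream selector from the result. It mimics the mapping for illustration and is not Fluent Bit's own implementation.

```python
import json
from typing import Any, Dict

# Copy of labelmap.json from the configmap above.
LABEL_MAP = json.loads("""
{
  "kubernetes": {
    "container_name": "container",
    "host": "node",
    "labels": {"app": "app", "release": "release"},
    "namespace_name": "namespace",
    "pod_name": "instance"
  },
  "stream": "stream"
}
""")


def apply_label_map(record: Dict[str, Any], label_map: Dict[str, Any]) -> Dict[str, str]:
    """Walk the label map and the record in parallel; string leaves name Loki labels."""
    labels: Dict[str, str] = {}
    for key, target in label_map.items():
        if key not in record:
            continue  # fields missing from the record produce no label
        if isinstance(target, dict):
            if isinstance(record[key], dict):
                labels.update(apply_label_map(record[key], target))
        else:
            labels[target] = str(record[key])
    return labels


def logql_selector(labels: Dict[str, str]) -> str:
    """Build a LogQL stream selector; assumes values contain no quotes or backslashes."""
    pairs = ", ".join(f'{name}="{value}"' for name, value in sorted(labels.items()))
    return "{" + pairs + "}"


if __name__ == "__main__":
    record = {"kubernetes": {"container_name": "nginx", "namespace_name": "default"},
              "stream": "stdout"}
    print(logql_selector({"job": "fluent-bit", **apply_label_map(record, LABEL_MAP)}))
```

In Grafana's Explore view, a selector such as `{job="fluent-bit", namespace="default", container="nginx"}` would then narrow the results to the nginx test pods.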