How to push logs to Observability

Prerequisite: Create logs

First, your operating system, service, or application has to produce logs. You can use any logging library for this.

The logs can be provided in two ways:

- Writing a log file.

- Writing to standard output.
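Both options work with the collectors described below. A minimal sketch using Python's standard `logging` module that writes the same records to a file and to standard output; the logger name `app`, the format string and the function name `configure_logging` are illustrative assumptions, and in practice you would usually pick just one of the two targets:

```python
import logging
import sys


def configure_logging(log_path: str) -> logging.Logger:
    """Send the same log records to a file and to standard output."""
    logger = logging.getLogger("app")
    logger.setLevel(logging.INFO)
    fmt = logging.Formatter("%(asctime)s %(levelname)s %(name)s %(message)s")

    # Option 1: a log file that a collector such as Promtail can tail
    file_handler = logging.FileHandler(log_path)
    file_handler.setFormatter(fmt)
    logger.addHandler(file_handler)

    # Option 2: standard output, which the container runtime captures
    stream_handler = logging.StreamHandler(sys.stdout)
    stream_handler.setFormatter(fmt)
    logger.addHandler(stream_handler)
    return logger
```

Calling `configure_logging("/app/logs/app.log").info("started")` would then produce one line in the file and the same line on stdout.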

Set up an agent to collect logs

Once the logs exist, they have to be collected. We recommend Grafana Promtail or Fluent Bit with the Loki plugin.
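Both agents ultimately deliver log lines to Loki's HTTP push endpoint, the `/loki/api/v1/push` path that appears in the URLs in this guide. A minimal sketch of the JSON body that this endpoint accepts (sent with `Content-Type: application/json`), assuming the plain JSON variant rather than protobuf; the labels and the function name `build_push_payload` are hypothetical, and the code only builds the payload without sending it:

```python
import json
import time


def build_push_payload(labels: dict[str, str], lines: list[str]) -> str:
    """Build a JSON body for Loki's POST /loki/api/v1/push endpoint.

    A stream is identified by its label set; each entry is a pair of
    [<Unix epoch in nanoseconds, as a string>, <log line>].
    """
    now_ns = time.time_ns()
    # Offset each entry by one nanosecond so the lines keep their order
    values = [[str(now_ns + i), line] for i, line in enumerate(lines)]
    payload = {"streams": [{"stream": labels, "values": values}]}
    return json.dumps(payload)
```

For example, `build_push_payload({"job": "varlogs"}, ["hello"])` yields one stream labelled `job="varlogs"` containing one entry.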

Example configurations for Promtail and Fluent Bit follow below.

Example: Setup with Promtail

In this example, an application writes its logs to its internal directory /app/logs.

We mount the local directory ./logs into the application container at that path.

Because Promtail needs access to the log files, this shared volume is required.

In the Promtail container, we mount the same directory at Promtail's logging directory /var/log and configure Promtail to collect all files ending in *.log and push them to the Observability Loki instance at the corresponding URL.
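The push URL used in the Promtail configuration comes from the Observability API: the `logsPushUrl` key of the `instance` dictionary in the response to `GET /v1/projects/{projectId}/instances/{instanceId}`. A minimal sketch of turning such a response into the `clients[].url` value with the credentials embedded, assuming the response has already been fetched and decoded from JSON (API host and authentication are out of scope here); the function name and the sample host `logs.example.test` are hypothetical:

```python
from urllib.parse import quote, urlsplit, urlunsplit


def promtail_client_url(api_response: dict, username: str, password: str) -> str:
    """Return logsPushUrl with user:password embedded, for Promtail's clients[].url."""
    push_url = api_response["instance"]["logsPushUrl"]
    parts = urlsplit(push_url)
    # Percent-encode the credentials so characters such as '@' or ':' cannot break the URL
    userinfo = f"{quote(username, safe='')}:{quote(password, safe='')}"
    netloc = f"{userinfo}@{parts.hostname}"
    if parts.port is not None:
        netloc += f":{parts.port}"
    return urlunsplit((parts.scheme, netloc, parts.path, parts.query, parts.fragment))
```

Percent-encoding matters when a generated password contains reserved characters; Promtail decodes the user information again when it parses the URL.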

Promtail configuration

```yaml
server:
  http_listen_port: 9080
  grpc_listen_port: 0
  log_level: "debug"

positions:
  filename: /tmp/positions.yaml

clients:
  # To get the URL, make a GET request to the Observability API with the path
  # /v1/projects/{projectId}/instances/{instanceId}. The URL can be found in the
  # "logsPushUrl" key of the "instance" dictionary in the response.
  - url: https://[username]:[password]@logs.stackit.argus.eu01.stackit.cloud/instances/[instanceId]/loki/api/v1/push

scrape_configs:
  - job_name: system
    static_configs:
      - targets:
          - localhost
        labels:
          job: varlogs
          __path__: /var/log/*.log
          app: app
```

Docker Compose file

```yaml
version: "3"

services:
  app:
    build:
      context: ./app
      dockerfile: ./docker/local/Dockerfile
    image: app
    container_name: app
    privileged: true
    volumes:
      - ./logs:/app/logs

  promtail:
    image: grafana/promtail:2.4.1
    volumes:
      - ./logs:/var/log
      - ./config:/etc/promtail/
    ports:
      - "9080:9080"
    command: -config.file=/etc/promtail/promtail.yaml
```

Fluent Bit

In the second example, we collect container logs with Fluent Bit (Promtail could be used here as well).

Fluent Bit configuration

```ini
[OUTPUT]
    Name         loki
    Match        *
    Tls          on
    Host         logs.stackit[cluster].argus.eu01.stackit.cloud
    Uri          /instances/[instanceId]/loki/api/v1/push
    Port         443
    Labels       job=fluent-bit,env=${FLUENT_ENV}
    Http_User    $FLUENT_USER
    Http_Passwd  $FLUENT_PASS
    Line_format  json

[SERVICE]
    # Fluent Bit needs a parser; this should point to the parsers file
    Parsers_File /fluent-bit/etc/parsers.conf
    Flush        5
    Daemon       Off
    Log_Level    debug

[FILTER]
    Name     parser
    Match    *
    Parser   docker
    Key_Name log

[INPUT]
    Name   forward
    Listen 0.0.0.0
    Port   24224
```

Parsers configuration

```ini
[PARSER]
    Name            docker
    Format          json
    Time_Key        time
    Time_Format     %Y-%m-%dT%H:%M:%S.%L
    Time_Keep       On
    Decode_Field_As json log log
    Decode_Field_As json level
    Decode_Field_As json ts
    Decode_Field_As json caller
    Decode_Field_As json msg msg
```

Docker Compose file

```yaml
version: "3"

services:
  fluentbit:
    image: grafana/fluent-bit-plugin-loki:2.4.1-amd64
    container_name: fluentbit_python_local
    volumes:
      # The logging directory contains parsers.conf and fluent-bit.conf
      - ./logging:/fluent-bit/etc
    ports:
      - "24224:24224"
      - "24224:24224/udp"

  app:
    build:
      context: ./app
      dockerfile: ./docker/local/Dockerfile
    image: app
    container_name: app
    privileged: true
    volumes:
      - ./:/app
    ports:
      - "3000:3000"
    command: sh -c 'air'
    logging:
      # This driver is needed so that Docker forwards the container output to Fluent Bit
      driver: fluentd
```

Installing Fluent Bit for Loki in the customer's Kubernetes cluster

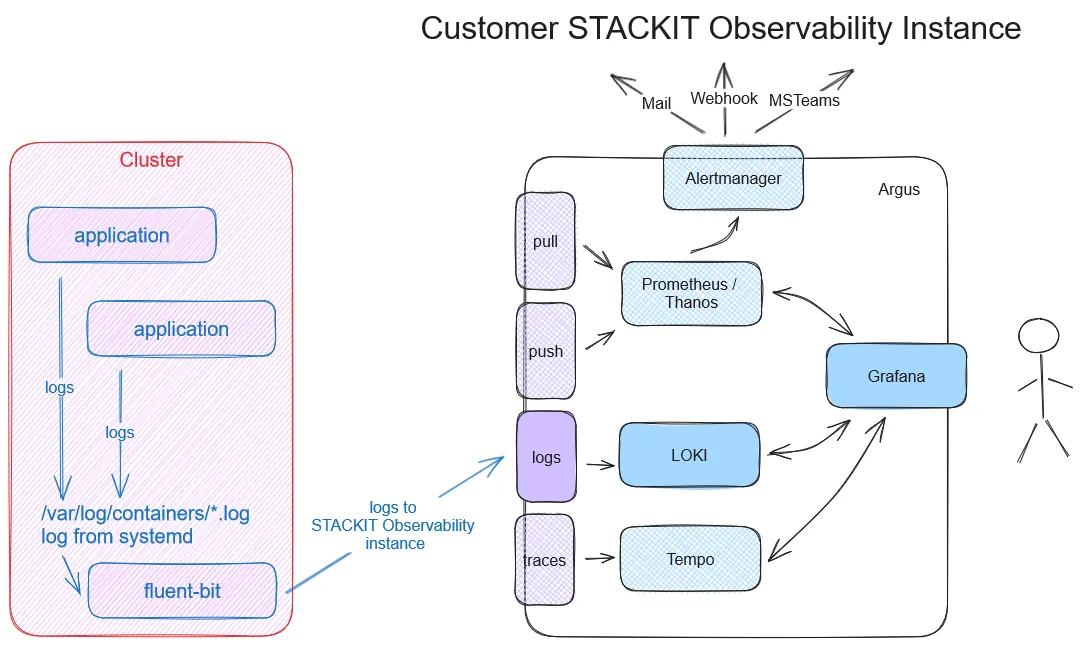

Overview

The goal is to send the logs of one or more applications to Loki. To do this, Fluent Bit must be installed in the customer's cluster so that it forwards the output to the customer's Observability instance.

Logs can be sent to several STACKIT Observability instances. In that case, the URL of each Fluent Bit output plugin must contain the ID of the respective instance.
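In practice this means one Fluent Bit `[OUTPUT]` section per instance, each with its own instance ID in `uri` and its own credentials. With `match *` every instance receives every record; a narrower `match` pattern per output routes only selected tags. A minimal sketch that renders such sections in the style of the Kubernetes ConfigMap in this guide; the function name, host and credential placeholders are hypothetical:

```python
def render_loki_outputs(host: str, instances: dict[str, tuple[str, str]]) -> str:
    """Render one Fluent Bit [OUTPUT] section per Observability instance.

    `instances` maps an instance ID to the (user, password) values for that instance.
    """
    sections = []
    for instance_id, (user, password) in instances.items():
        settings = [
            ("name", "loki"),
            ("match", "*"),
            ("host", host),
            ("uri", f"/instances/{instance_id}/loki/api/v1/push"),
            ("port", "443"),
            ("tls", "on"),
            ("http_user", user),
            ("http_passwd", password),
        ]
        # Left-align keys in a 12-character column, like a hand-written config
        body = "\n".join(f"    {key:<12}{value}" for key, value in settings)
        sections.append("[OUTPUT]\n" + body)
    return "\n\n".join(sections)
```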

Check which Fluent Bit version you are using. Versions older than 2.2.2 do not support URLs with a non-standard path.
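A minimal sketch of that version check, assuming the version is available as a plain `MAJOR.MINOR.PATCH` string (for example from an image tag such as `2.2.2`); floating tags like `latest` cannot be checked this way. The function name is hypothetical:

```python
MIN_VERSION = (2, 2, 2)  # per this guide, the first version that supports non-standard push paths


def supports_custom_uri(version: str) -> bool:
    """Return True if a MAJOR.MINOR.PATCH version string is 2.2.2 or later."""
    parts = tuple(int(p) for p in version.lstrip("v").split(".")[:3])
    # Pad short versions such as "2.2" with zeros before comparing tuples
    parts = parts + (0,) * (3 - len(parts))
    return parts >= MIN_VERSION
```

Comparing integer tuples avoids the pitfall of string comparison, where `"2.10.0" < "2.2.2"` would be true.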

Installation in a Kubernetes cluster

- Fluent Bit and Kubernetes: https://docs.fluentbit.io/manual/installation/downloads/kubernetes#installation

- Fluent Bit Loki output: https://docs.fluentbit.io/manual/pipeline/outputs/loki

- Fluent Bit Helm chart: https://github.com/fluent/helm-charts (use version fluent-bit-0.43.0 or later)

- Docker image used: https://hub.docker.com/r/fluent/fluent-bit (use Docker image version 2.2.2 or later)

Example of a Fluent Bit deployment

Deploy the following files to a separate namespace in your application cluster:

- Create the namespace (namespace.yaml):

```yaml
kind: Namespace
apiVersion: v1
metadata:
  name: kube-logging
```

- Create the service account (service-account.yaml):

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluent-bit
  namespace: kube-logging
```

- Create the cluster role (role.yaml):

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: fluent-bit-read
rules:
  - apiGroups: [""]
    resources:
      - namespaces
      - pods
    verbs: ["get", "list", "watch"]
```

- Create the role binding (role-binding.yaml):

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: fluent-bit-read
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: fluent-bit-read
subjects:
  - kind: ServiceAccount
    name: fluent-bit
    namespace: kube-logging
```

- Create the Fluent Bit DaemonSet (daemonset.yaml):

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluent-bit
  namespace: kube-logging
  labels:
    app.kubernetes.io/name: fluent-bit
    app.kubernetes.io/instance: fluent-bit-loki
    app.kubernetes.io/version: "2.2.2"
spec:
  selector:
    matchLabels:
      k8s-app: fluent-bit-logging
  template:
    metadata:
      labels:
        k8s-app: fluent-bit-logging
    spec:
      containers:
        - name: fluent-bit
          image: "fluent/fluent-bit:latest"
          imagePullPolicy: Always
          command:
            - /fluent-bit/bin/fluent-bit
          args:
            - --workdir=/fluent-bit/etc
            - --config=/fluent-bit/etc/conf/fluent-bit.conf
          ports:
            - name: http
              containerPort: 2020
              protocol: TCP
          livenessProbe:
            httpGet:
              path: /
              port: http
          readinessProbe:
            httpGet:
              path: /api/v1/health
              port: http
          volumeMounts:
            - name: varlog
              mountPath: /var/log
            - name: varlibdockercontainers
              mountPath: /var/lib/docker/containers
              readOnly: true
            - name: journal
              mountPath: /journal
              readOnly: true
            - name: fluent-bit-config
              mountPath: /fluent-bit/etc/conf
      terminationGracePeriodSeconds: 10
      volumes:
        - name: varlog
          hostPath:
            path: /var/log
        - name: journal
          hostPath:
            path: /var/log/journal
        - name: varlibdockercontainers
          hostPath:
            path: /var/lib/docker/containers
        - name: fluent-bit-config
          configMap:
            name: fluent-bit-config
      serviceAccountName: fluent-bit
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
```

- Create a ConfigMap that contains all Fluent Bit configuration files.

Create credentials (a service account) for your Observability instance as follows:

- Open https://portal.stackit.cloud/,

- select a project,

- in the “Overview” area, open “Services”,

- create an Observability service if you do not have one yet,

- click the instance name,

- in the “Credentials” area, click “Create credentials”,

- enter a name for the credentials,

- save the credentials with the “Copy JSON” button,

- the following fields are needed for the next step:

logsPushUrl: https://logs.stackit[cluster].argus.eu01.stackit.cloud/instances/[instanceId]/loki/api/v1/push

username: $FLUENT_USER

password: $FLUENT_PASS

Adjust the following fields in the OUTPUT section of fluent-bit.conf in configmap.yaml:

host: logs.stackit[cluster].argus.eu01.stackit.cloud

uri: /instances/[instanceId]/loki/api/v1/push

http_user: $FLUENT_USER

http_passwd: $FLUENT_PASS
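These values come from splitting the `logsPushUrl` of the copied credentials into host, path and port. A minimal sketch, assuming the JSON copied via “Copy JSON” is a flat object with the keys `logsPushUrl`, `username` and `password` (the exact layout of that JSON is an assumption); the function name and sample values are hypothetical:

```python
import json
from urllib.parse import urlsplit


def loki_output_fields(credentials_json: str) -> dict[str, str]:
    """Derive the Fluent Bit [OUTPUT] settings from copied Observability credentials."""
    creds = json.loads(credentials_json)
    url = urlsplit(creds["logsPushUrl"])
    return {
        "host": url.hostname,
        "uri": url.path,
        # Fall back to 443 (the HTTPS default) when the URL has no explicit port
        "port": str(url.port or 443),
        "http_user": creds["username"],
        "http_passwd": creds["password"],
    }
```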

configmap.yaml

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: fluent-bit-config
  namespace: kube-logging
  labels:
    k8s-app: fluent-bit
data:
  fluent-bit.conf: |
    [SERVICE]
        Daemon        Off
        Flush         1
        Log_Level     info
        Parsers_File  /fluent-bit/etc/parsers.conf
        Parsers_File  /fluent-bit/etc/conf/custom_parsers.conf
        HTTP_Server   On
        HTTP_Listen   0.0.0.0
        HTTP_Port     2020
        Health_Check  On

    [INPUT]
        Name             tail
        Path             /var/log/containers/*.log
        Parser           cri
        Tag              kube.*
        Mem_Buf_Limit    5MB
        Skip_Long_Lines  On

    [INPUT]
        Name            systemd
        Tag             host.*
        Systemd_Filter  _SYSTEMD_UNIT=kubelet.service
        Read_From_Tail  On

    [FILTER]
        Name                 kubernetes
        Match                kube.*
        Merge_Log            On
        Keep_Log             Off
        K8S-Logging.Parser   On
        K8S-Logging.Exclude  On

    [OUTPUT]
        name            loki
        match           *
        host            logs.stackit[cluster].argus.eu01.stackit.cloud
        uri             /instances/[instanceId]/loki/api/v1/push
        port            443
        http_user       $FLUENT_USER
        http_passwd     $FLUENT_PASS
        tls             on
        tls.verify      on
        line_format     json
        labels          job=fluent-bit
        label_map_path  /fluent-bit/etc/conf/labelmap.json

  parsers.conf: |
    # CRI Parser
    [PARSER]
        # http://rubular.com/r/tjUt3Awgg4
        Name         cri
        Format       regex
        Regex        ^(?<time>[^ ]+) (?<stream>stdout|stderr) (?<logtag>[^ ]*) (?<message>.*)$
        Time_Key     time
        Time_Format  %Y-%m-%dT%H:%M:%S.%L%z

  custom_parsers.conf: |
    [PARSER]
        Name             docker
        Format           json
        Time_Key         time
        Time_Format      %Y-%m-%dT%H:%M:%S.%L
        Time_Keep        On
        Decode_Field_As  json log log
        Decode_Field_As  json level
        Decode_Field_As  json ts
        Decode_Field_As  json caller
        Decode_Field_As  json msg msg

  labelmap.json: |-
    {
      "kubernetes": {
        "container_name": "container",
        "host": "node",
        "labels": {
          "app": "app",
          "release": "release"
        },
        "namespace_name": "namespace",
        "pod_name": "instance"
      },
      "stream": "stream"
    }
```

Test application deployment

- Create a small test application that produces logs.

First, we need a volume for the nginx test application.

mypvc.yaml

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi # Request 1 gibibyte of storage
```

- Now we can deploy an nginx web server.

nginx-deployment.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80
          resources:
            limits:
              memory: "256Mi" # Maximum memory allowed
              cpu: "200m" # Maximum CPU allowed (200 millicores)
            requests:
              memory: "128Mi" # Initial memory request
              cpu: "100m" # Initial CPU request
          livenessProbe:
            httpGet:
              path: / # The path to check for the liveness probe
              port: 80 # The port to check on
            initialDelaySeconds: 15 # Wait this many seconds before starting the probe
            periodSeconds: 10 # Check the probe every 10 seconds
          readinessProbe:
            httpGet:
              path: / # The path to check for the readiness probe
              port: 80 # The port to check on
            initialDelaySeconds: 5 # Wait this many seconds before starting the probe
            periodSeconds: 5 # Check the probe every 5 seconds
      volumes:
        - name: data
          persistentVolumeClaim:
            claimName: my-pvc # Name of the PersistentVolumeClaim
```

- Check the pods after the deployment:

```shell
$ kubectl get namespaces
NAME              STATUS   AGE
default           Active   6d
kube-logging      Active   4d3h
kube-node-lease   Active   6d
kube-public       Active   6d
kube-system       Active   6d

$ kubectl get pods -n kube-logging
NAME               READY   STATUS    RESTARTS   AGE
fluent-bit-c9b8d   1/1     Running   0          23h

$ kubectl get pods -n default
NAME                                READY   STATUS    RESTARTS   AGE
nginx-deployment-58fc999d7b-56jrq   1/1     Running   0          9m2s
nginx-deployment-58fc999d7b-5gkwz   1/1     Running   0          9m2s
nginx-deployment-58fc999d7b-gxbfr   1/1     Running   0          9m2s
```

Check the logs

You can now open Grafana and check the logs through the Loki data source.
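In Grafana, logs are selected with a LogQL stream selector built from the labels configured above: `job=fluent-bit` from the `[OUTPUT]` section plus the labels produced by `labelmap.json`, such as `namespace` and `container`. A minimal sketch that assembles such a selector; the function name is hypothetical and the label values depend on your deployment:

```python
def logql_selector(labels: dict[str, str]) -> str:
    """Build a LogQL stream selector such as {job="fluent-bit", namespace="default"}."""

    def escape(value: str) -> str:
        # Escape backslashes and double quotes so each value is a valid LogQL string literal
        return value.replace("\\", "\\\\").replace('"', '\\"')

    matchers = ", ".join(f'{name}="{escape(value)}"' for name, value in labels.items())
    return "{" + matchers + "}"
```

For the nginx test application, `logql_selector({"job": "fluent-bit", "namespace": "default", "container": "nginx"})` returns `{job="fluent-bit", namespace="default", container="nginx"}`, which can be pasted into Grafana's Explore view.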